Last week a question came up on Stack Overflow about determining whether a variable is normally distributed. Some of the answers reminded me of a pervasive misconception about how to apply tests of normality. I felt the topic was general enough to reproduce my comments here (with minor edits).

Misconception: If your statistical analysis requires normality, it is a good idea to use a preliminary hypothesis test to screen for departures from normality.

The Shapiro-Wilk test, the Anderson-Darling test, and others are null hypothesis tests of the assumption of normality. They should not be used to decide whether to use normal-theory statistical procedures. In fact, they are of virtually no value to the data analyst. Under what conditions are we interested in rejecting the null hypothesis that the data are normally distributed? I, personally, have never come across a situation where a normality test is the right thing to do. The problem is that when the sample size is small, even big departures from normality often go undetected, and when the sample size is large, even the smallest deviation from normality will lead to a rejected null.

Let’s look at a small sample example:

> set.seed(100)

> x <- rbinom(15,5,.6)

> shapiro.test(x)

Shapiro-Wilk normality test

data: x

W = 0.8816, p-value = 0.0502

> x <- rlnorm(20,0,.4)

> shapiro.test(x)

Shapiro-Wilk normality test

data: x

W = 0.9405, p-value = 0.2453

In both of these cases (binomial and lognormal variates) the p-value is greater than 0.05, so we fail to reject the null hypothesis (that the data are normal). Does this mean we are to conclude that the data are normal? (Hint: the answer is no.) Failing to reject the null is not the same thing as accepting it. This is hypothesis testing 101.
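A single sample only says so much, so here is a minimal simulation sketch of how often `shapiro.test` rejects at the 5% level when the data really are lognormal, using the same `rlnorm(n, 0, .4)` setup as the example above. The seed, the number of simulations (`n_sims`), the larger sample sizes, and the helper `rejection_rate` are my own choices for illustration, not part of the original example.

```r
# Estimate the rejection rate of the Shapiro-Wilk test for lognormal data
# at several sample sizes. All settings below are illustrative assumptions.
set.seed(42)                      # arbitrary seed, for reproducibility only
n_sims <- 1000                    # simulated samples per sample size
sample_sizes <- c(20, 200, 2000)  # shapiro.test() accepts 3 <= n <= 5000

# Hypothetical helper: fraction of simulated samples with p-value < alpha
rejection_rate <- function(n, alpha = 0.05) {
  p_values <- replicate(n_sims, shapiro.test(rlnorm(n, 0, 0.4))$p.value)
  mean(p_values < alpha)
}

rates <- sapply(sample_sizes, rejection_rate)
names(rates) <- sample_sizes
print(rates)
```

One minus the rate at n = 20 is the share of clearly non-normal samples that a preliminary test would wave through at that sample size.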

But what about larger sample sizes? Let’s take the case where the distribution is very nearly normal.

> library(nortest)

> x <- rt(500000,200)

> ad.test(x)

Anderson-Darling normality test

data: x

A = 1.1003, p-value = 0.006975

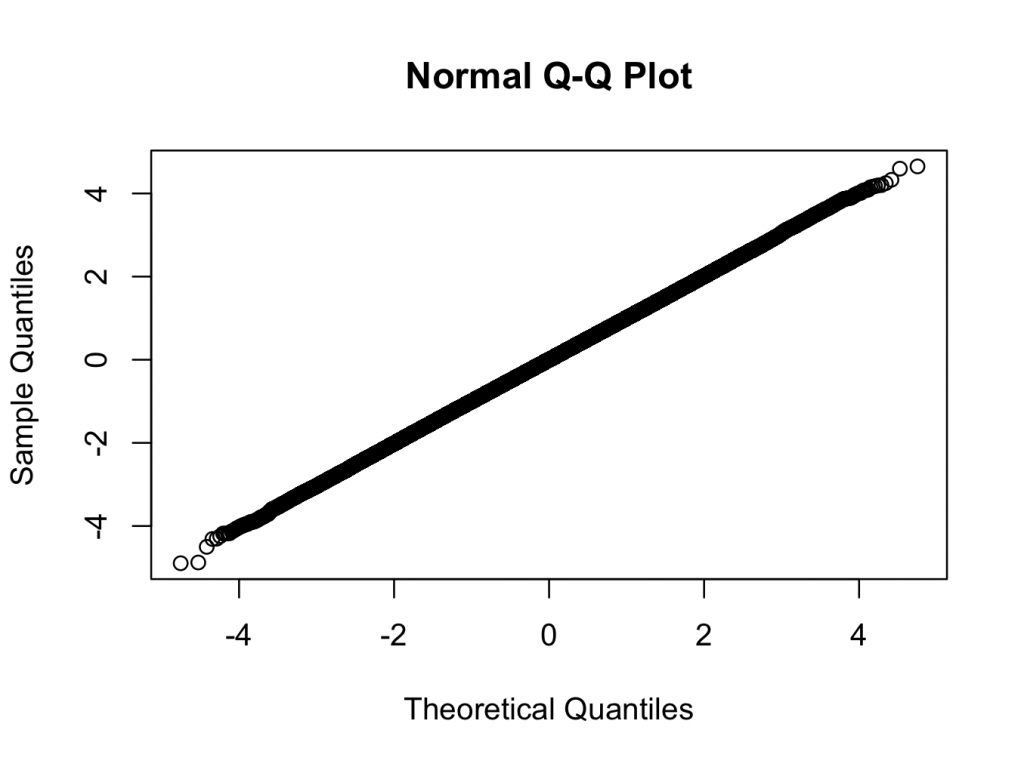

> qqnorm(x)

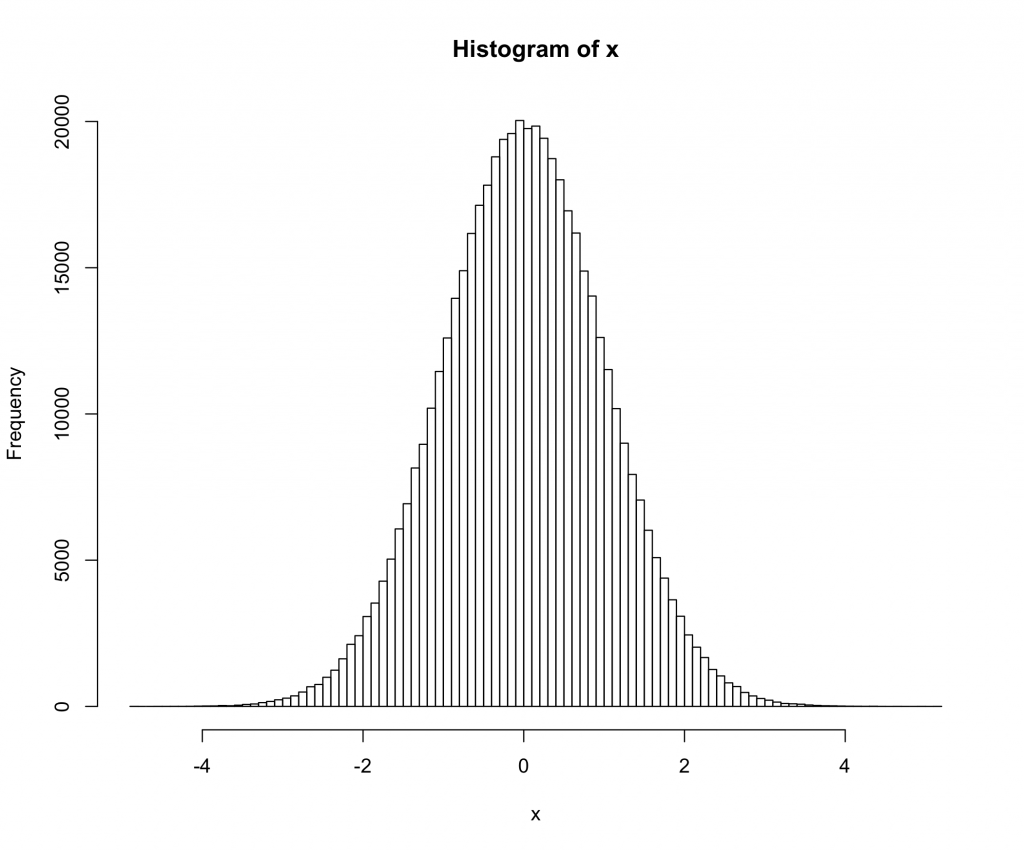

> hist(x,breaks=100)

Here we are using a t-distribution with 200 degrees of freedom. The Q-Q plot and histogram show that the distribution is closer to normal than any distribution you are likely to see in the real world, yet the test rejects normality decisively (p ≈ 0.007).
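To put a rough number on how small this departure is, the sketch below compares the t-distribution with 200 degrees of freedom to the standard normal directly, without any sampling. The choice of probabilities, the grid, and the variable names are my own assumptions for illustration.

```r
# Compare Student's t with 200 degrees of freedom to the standard normal.
df <- 200
probs <- c(0.90, 0.95, 0.975, 0.995)  # illustrative upper-tail probabilities

# Difference between matching quantiles of the two distributions
quantile_gap <- qt(probs, df) - qnorm(probs)

# Variance of a t-distribution (defined for df > 2); the normal has variance 1
t_variance <- df / (df - 2)

# Largest vertical gap between the two CDFs, evaluated on a fine grid
grid <- seq(-5, 5, by = 0.001)
max_cdf_gap <- max(abs(pt(grid, df) - pnorm(grid)))

print(round(quantile_gap, 4))
print(t_variance)
print(max_cdf_gap)
```

These are the differences that half a million observations give the Anderson-Darling test enough power to flag.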

Does the significant test against normality mean that we should not use normal theory statistics in this case? (Another hint: the answer is no 🙂 )